Chapter 9 Messy and Missing Data

You have to love the nice, complete datasets I have served up to you in this and other statistics texts. Indeed, some trials will work out this way – in general, the simpler the treatment (and the technology used to apply it) the greater your chances of a complete dataset. Planters will jam, nozzles will plug, and if a trial has 12 treatments a couple of them may end up in the wrong plot. Best to avoid those if possible.

As well, the smaller the experiment, or the more controlled the environment, the greater your odds of a complete dataset. For that reason, decades of university and industry research has been performed in 10- x 40- plots stacked closely together on manicured, table-top-flat ground. If you were a seed-breeder, you had dibs on these fields. If you were an ecologist like me, you might have to head to the back 40 (j/k).

If you can farm in your head or your basement, drop me a note and I will exempt you, with envy from this unit. For those of us who farm by the acre or section, however, the issue of data quality is an agonizing and sometimes subjective topic – but critical. Remember, most of our statistics are models, and the saying goes: “junk in, junk out.” Your models are only as good as the data you use to build them.

Part of your job as a researcher or end-user of any data, before you conduct or read the results from any test, is to ask yourself – are the data reliable enough to support the inferences? A trial with wildly-different experimental units may bias results – if all the replicates of one treatment end up in the better units and others are concentrated in the poorer ones. You may falsely recommend a product if you don’t catch this.

At the other extreme, the failure conduct research on similar experimental units will introduce background variance (or noise) that prevents a statistical test from concluding a difference is not the result of chance, even though the treatments are, in fact, different. In that case, you may fail to introduce – or adopt – a product or technology with real promise.

In this unit, we will first learn ways to inspect datasets for extreme values which, even given the inherent variability of data, may be suspect. Boxplots, histograms, and probability plots will be our tools for these exercises.

We will then address the uncomfortable question of what to do when we have missing data, either because a plot was compromised during the trial, or because we rejected that plot because its extreme value.

9.1 Inspecting data for Normal Distributions

I know, I know, it is so exciting to have data! The hard physical work of the research is done, the data is painstakingly entered into Excel. Let’s run the ANOVAs and regressions now – if we hurry we can still make it to happy hour!

It is so tempting to jump right into deep analyses as the data roll in. But it is important to realize these analyses are based on assumptions:

- That the observations for each treatment level are normally distributed around the treatment mean

- That the variance or spread of observations around each level of treatment is roughly equal.

- That experimental units are comparable among treatment levels, so that the treatment and error effects are appropriately separated.

It is very possible, based on the nature of trials, that one or more of these assumptions may be violated. If you have ever counted weeds in a herbicide trial, you have noted that well-treated plots have weed counts that are consistently near zero – but that weedy checks have wildly variable counts (100, 200, 300 weeds). Growth analysis (where plants of different sizes are measured) is also prone to messy data issues, because variance in measurements increases numerically as plants grow. Experimental units (plots) in both commercial and research farm fields can vary because of prior management unknown to the researcher.

9.1.1 Histograms

In the very first unit of this course Unit 2, you were introduced to the histogram. Recall the histogram is a vertical bar chart in which the width of bars defines the different ranges into which observations are grouped, and the height represents the count or proportion of observations falling into each range.

In the data below, we have a dataset with 500 observations of corn yield. The mean is approximately 180. We can see the data distribution is approximately normal.

The summary data are shown below. We can see that the median and mean are both approximately 180 bushels per acre. We can also see the 1st and 3rd quantiles (equal to the 25th and 75th percentiles) are a little over three bushels from the median. The minimum and maximum observations are also similarly spaced from the median.

| Min. | 1st Qu. | Median | Mean | 3rd Qu. | Max. |

|---|---|---|---|---|---|

| 164.8697 | 176.8281 | 180.1694 | 180.0362 | 183.3801 | 194.2372 |

When we are dealing with data such as pest counts, our data may be non-normal. Rather than being symmetrical, the data may be skewed to one side or another. For example, in the dataset below, total velvetleaf dry weight in grams per square meter was measured. If you have worked like me with weed populations, you realize weed competitiveness is all about outracing the crop to the sun. If the weed loses, which it will in most cases, it will be small. But the proud few weeds who beat the crop will be huge. That is reflected in the data below.

When we look at the histogram, the data are skewed to the right. The histogram is not symmetrical.

| Min. | 1st Qu. | Median | Mean | 3rd Qu. | Max. |

|---|---|---|---|---|---|

| 0.7386857 | 2.822542 | 5.053556 | 6.010417 | 8.333231 | 23.2785 |

When we look at the summary data, we first notice the mean and median are different. For a dataset this size (1000 observations, we would expect them to be more similar.) We notice that the first quantile is closer to the median than the third quantile. The greatest indication the data is skewed, however, is is that the minimum is about 4 plants less than the median, while the maximum is about 18 plants greater.

Data like this may be transformed (mathematically re-scaled) so that it is more normal for analyses. We will cover this below.

9.1.2 Rank Percentile Plots

Another way to inspect the normality of datasets is to use a rank percentile plot. This plot uses the percentile rank of each observation, from lowest to highest, as the x-value of each point. The y-value of the point is its observed value.

The data for our normally-distributed corn yield dataset are plotted in the rank percentile plot below. Normally-distributed data tend to be strongly linear in the middle of the plot. If we draw a regression line through the plot, you can see most of the data are close to that line. The lowest percentile points fall below the line. That means they are a little lower in value than the normal distribution function might predict. The opposite is true of the highest percentile points. This indicates our distribution is a little bit wider than normal, but not enough that we cannot use it for analysis.

Our skewed data, however, shows up quite differently in the rank percentile plot. We can see that most of the data closely fit a line. But starting around the 75th percentile, the observed values are much greater than the predicted values – almost twice as much. This means the distribution is much wider to the right of the distribution curve than to the left, and that the data are non-normal

9.1.3 Box Plots

The first two methods I have shown you, the histogram and rank percentile plots, are useful if you have a few treatments with a large number of replicates. They are taught in every statistics course and you should know about them. But, in my experience, they are not useful if you have a trial with fewer replications. A normal distribution is not going to appear in a histogram if you only have four replicates – instead you will just see the four individual measurements.

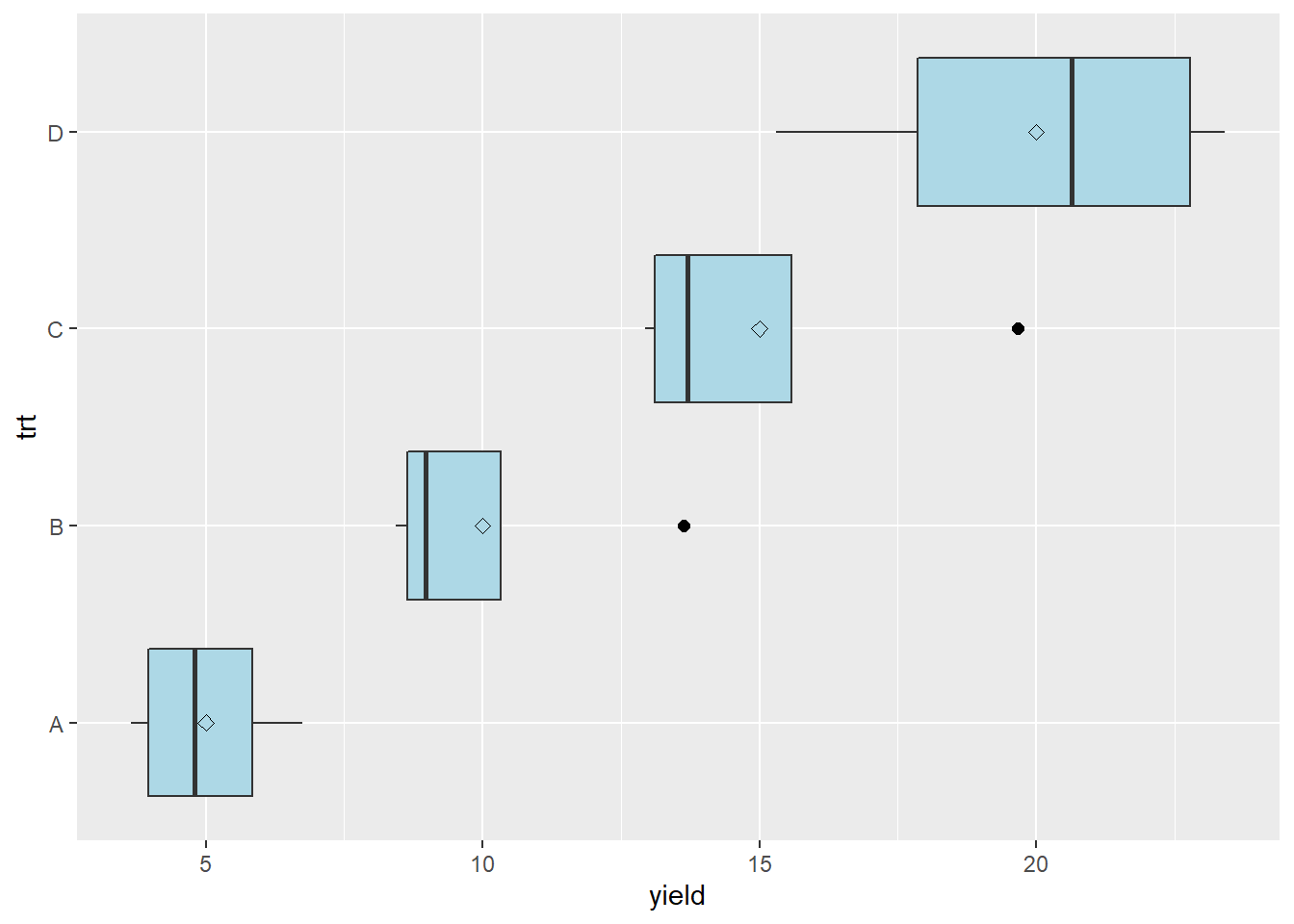

Box plots, on the other hand, are very useful for inspecting multiple treatments. In the plot below, boxplots for four corn treatments are shown. The treatments are labeled A, B, C, and D. The data are plotted so their treatments are listed along the vertical axis, and their values are listed along the y-axis.

The boxplots help us understand the distribution of the data. Lets start with the box, which tells about the spread of the data. The left side of the box is the 25th percentile, the line in the middle is the 50th percentile (or median), and the right side of the box is the 75th percentile. So the box shows us the spread of the middle half of the observations for each treatment.

The diamond shown within the box is the mean. In a normal distribution, the median and mean should be close.

The lines extending from the left and right side of the box are called whiskers. The whiskers extend to the lowest and highest observations for a treatment. The whiskers extend no more more than 1.5 times the inter-quartile range, which for the lower whisker is the the difference between the 25th and 50th percentiles, and for the upper whisker is the difference between the 50th and 75th percentiles.

In treatment B, we can see the upper whisker is missing, and instead there is a point to the right of the bar. If an observation is beyond 1.5 times the interquartile range, the whisker is not shown and the observation is instead represented by a point. This observation is called an outlier, meaning that it outside the range of values expected in a normal distribution. We will talk more about outliers in the next section.

The boxplot tells us something beyond the distribution of the individual treatments. If the boxes are markedly different in their width, the data may have substantially different variances. We should investigate these further using a mean-variance plot, and perhaps a statistical test of heterogeneity.

9.2 Inspecting Data for Equal Variances

So far, we have learned to use the t-test and analysis of variance to test named treatments (that is, hybrids, management practices, and other products that can be described by name). These tests generally assume not only that observed values are normally distributed, but that the variances are approximately equal among the different treatments in our experiment. If the variances are unequal, we may calculate least significant differences (LSDs) or honest significant differences (HSDs) that are inappropriate. Among treatments that have smaller variances, our LSD or HSD may be overestimated; among treatments that have larger variances, the LSD or HSD may be underestimated.

The visual inspection of individual treatment distributions in the box plot above, followed by a scatter plot of the treatment variances versus their means, can give us a visual sense of unequal variances. These suspicions can then be tested using a Test for Homogeneity that calculates the probability of differences in variances as greater as those observed.

9.2.1 Mean-Variance Plot

In a mean-variance plot, the treatment means are plotted along the horizontal axis and the variances are plotted along the vertical axis. The plot for the corn yield dataset we have used so far is shown below.

We can see the variance of treatment B is many times greater than that of the other treatments. In general, we like to see the variances differ by no more than a factor of 2.

In cases where we are dealing with populations that either “thrive or die” based on environment – particularly pest populations – we may see relationships between the mean and variance. Pest count data is often like this. In our velvetleaf counts, for example, we might find that our greater treatment means are also associated with greater variations in counts between plots.

In this case, the mean-variance plot may show a linear relationship between variance and mean.

Finally, we may observe a dataset in which the distributions not only increase with means, but seem to do so exponentially.

In this case, the mean-variance plot may show a curved relationship between variance and mean.

We may want to check whether the standard deviation, which is the square root of the variance, has a more linear relationship to mean.

The largest mean has a significant difference of 6.9, while the smallest mean has a significant difference of 0.3. In other words, the largest significant difference is 23 times the smallest significant difference.

The largest mean has a significant difference of 6.9, while the smallest mean has a significant difference of 0.3. In other words, the largest significant difference is 23 times the smallest significant difference.

9.2.2 Homogeneity of Variance Tests

In previous units, we learned how to compare two variances – using the F-test. In the Analysis of Variance, we tested whether the variance among treatment means was greater than the variance within treatments. If the treatment variance was sufficiently greater than the error variance, we concluded the treatment effect explained a significant amount of the variation in observed values.

In this unit, we want to do something similar – we want to compare the variances associated with multiple treatments to see if they are significantly different. When data are normally-distributed, the method for comparing multiple variances is Bartlett’s Test. (If you continue your statistical travels, you may come across Levene’s Test, but that is for non-normal data.)

Bartlett’s test, as best as I can tell (the formula is awful and no one seems willing to explain it), acts like a sum of squares for variances, comparing the variances of the individual treatments to their mean when pooled together. This is an incomplete explanation, but I hope it will satisfy the curious. Fortunately for us, the test is easy to implement in R and the output simple.

If we run Bartlett’s test on our corn data above, we get the following results.

##

## Bartlett test of homogeneity of variances

##

## data: yield by trt

## Bartlett's K-squared = 10.355, df = 3, p-value = 0.01578Let’s go through the output. “Bartlett’s K-squared” is the statistic produced by the nasty formula I referenced above. Don’t worry about that. The degrees of freedom refers to the four treatments whose variances we are comparing. Most important, of course, is our p-value. There are many opinions on when to transform data – but I would recommend against transforming data unless the –value is less than 0.01. I would also recommend running your data on both the transformed and untransformed data and comparing results. If transformation does not change your inferences, then skip it.

Here is the Bartlett’s test on our velevleaf data where the mean and standard deviation were linearly related:

##

## Bartlett test of homogeneity of variances

##

## data: yield by trt

## Bartlett's K-squared = 14.217, df = 3, p-value = 0.002625In this case, we will want to analyze both the transformed and untransformed data before deciding which to us for our final inferences.

9.3 Dealing with Messy Data

Dealing with messy data is one of the more uncomfortable aspects of statistics, but also one of the most important. Our tests and summary statistics are based on assumptions. For tests, we assume the data are from a populations that have approximately normal distributions. We also assume they have variances that are equal – otherwise our mean separation tests will fail.

And finally, and this is a concept that I learned just while writing this: the validity of our inferences is based on the assumption that our samples represent the population that we are studying. Which brings us back to outliers.

9.3.1 Outliers

Outliers can have a powerful effect in skewing data. Particularly in smaller datasets (i.e. fewer than 10 replicates per treatment), an outlier can have noticeable effects on a distribution’s normality and its variance. In regression analyses, one outlier can significantly change the slope of the model.

Does this mean that outliers should be omitted from the dataset? Not necessarily – first we should inspect the data more closely. The outlier might be a mistake in recording a measurement. It could be an inconsistent scale in a combine. These would be experimental errors that mean the outlier is an artifact of our methods, rather than a representative sample from our population. In that case, we may want to remove that observation from our dataset.

But investigating the outlier may also include examining the location where it was taken. This can be difficult if you are not the primary researcher and on-site where the data were gathered. But if you can overlay your plot map with a soils map, or work with aerial imagery, or examine as-applied maps, you may be able to identify differences in environment or management that caused a dramatic difference in the observed value.

In such a case, you may decide that plot did not represent the environment about which you were trying to draw inferences, and choose to omit it from the dataset. At the same time, however, knowing that the outlier’s environment or management had a dramatic effect on its performance, you may generate new hypotheses about that product. In fact, you may learn more from your outlier, through the new research it inspires, than you do from the original experiment.

Also, before removing an outlier, it is a good idea to run your tests with and without it to see whether it changes your conclusions. When you run your model, look at the standardized residuals. How many standard errors is the outlier from the predicted value? As a rule, if an observed value is more than two standard deviations from the predicted value, I scrutinize it before allowing it into the final analysis.

If you are comparing different treatments, does it change the significance of tests or differences among treatments? If you are generating a regression model, does the change in slope have a dramatic effect on the values you will predict? Will the change in slope have a dramatic effect on grower inputs and cost, or will the effect be more modest?

These are important questions, because as uncomfortable as it is to identify and/or remove outliers, working with incomplete datasets can be even nastier. If the statistical significance of tests or means separations are not affected by the outlier, it is best to leave it in the dataset if possible, especially if treatment replications are limited.

9.3.2 Non-normal Data and Unequal Variances

Above, we discussed two other problems with data: data that were skewed (or non-normal) and therefore could not be modelled based on the normal distribution, and data were treatment variances were not equal – they were, in statistical terminology, they suffered from heterogeneity of variances.

Both issues can arise out of trials where observation values vary widely: counts that include rare events or where one treatment (like a check plot) can “blow up” and have a huge value. Growth studies, where size increases occur at exponential rates, are another.

These two issues may be similarly addressed by transforming the data. When we transform data, we use a different measuring system to rescale it. The easiest example of rescaling data is pH. Recall pH is the concentration of hydrogen atoms in a solution. This concentration can range from \(0\) to \(10^{-14}\)

So when is the last time you read that your soil pH was \(6.5 \times 10^{-6}\) ? You wouldnt. We commonly speak of pH as ranging from 1 (highly acidic) through 7 (neutral) to 14 (highly basic). Your are used to using the logarithmic scale(10, 1, 0.10, 0.010), rather than the arithmatic scale (1,2,3,4,5). The decibel scale for sound and the Richter scale for earthquakes also use the logarithmic scale.

9.3.2.1 Natural Logarithm

There are several ways to transform data, but the one I have most-often used is the natural logarithm The natural logarithm transformation is often used when we are working with data that have a wide range of values. What constitutes a wide range of values? Think growth analysis, or counts of common events (for example, weed counts in a herbicide trial that includes treatments that vary widely in effectiveness). In these trials, it is not uncommon for observed values to vary by two or more orders of magnitude (powers of 10).

Our process for working with transformed data is as follows:

- Transform the original observations to their natural logs.

- Calculate the ANOVA

- Cacluate treatment means using transformed data

- Back-transform the treatment means to the original measurement scale so they are more intuitive to users

Lets work with our velvetleaf data above. Below is the analysis of variance of our initial, untransformed data:

| term | df | sumsq | meansq | statistic | p.value |

|---|---|---|---|---|---|

| trt | 3 | 500.00 | 166.6667 | 8.767315 | 0.0023712 |

| Residuals | 12 | 228.12 | 19.0100 | NA | NA |

| yield | groups | |

|---|---|---|

| D | 20 | a |

| C | 15 | ab |

| B | 10 | bc |

| A | 5 | c |

The treatment effect is significant, and some of the treatments are significantly different from each other, but there are also noticeable overlaps.

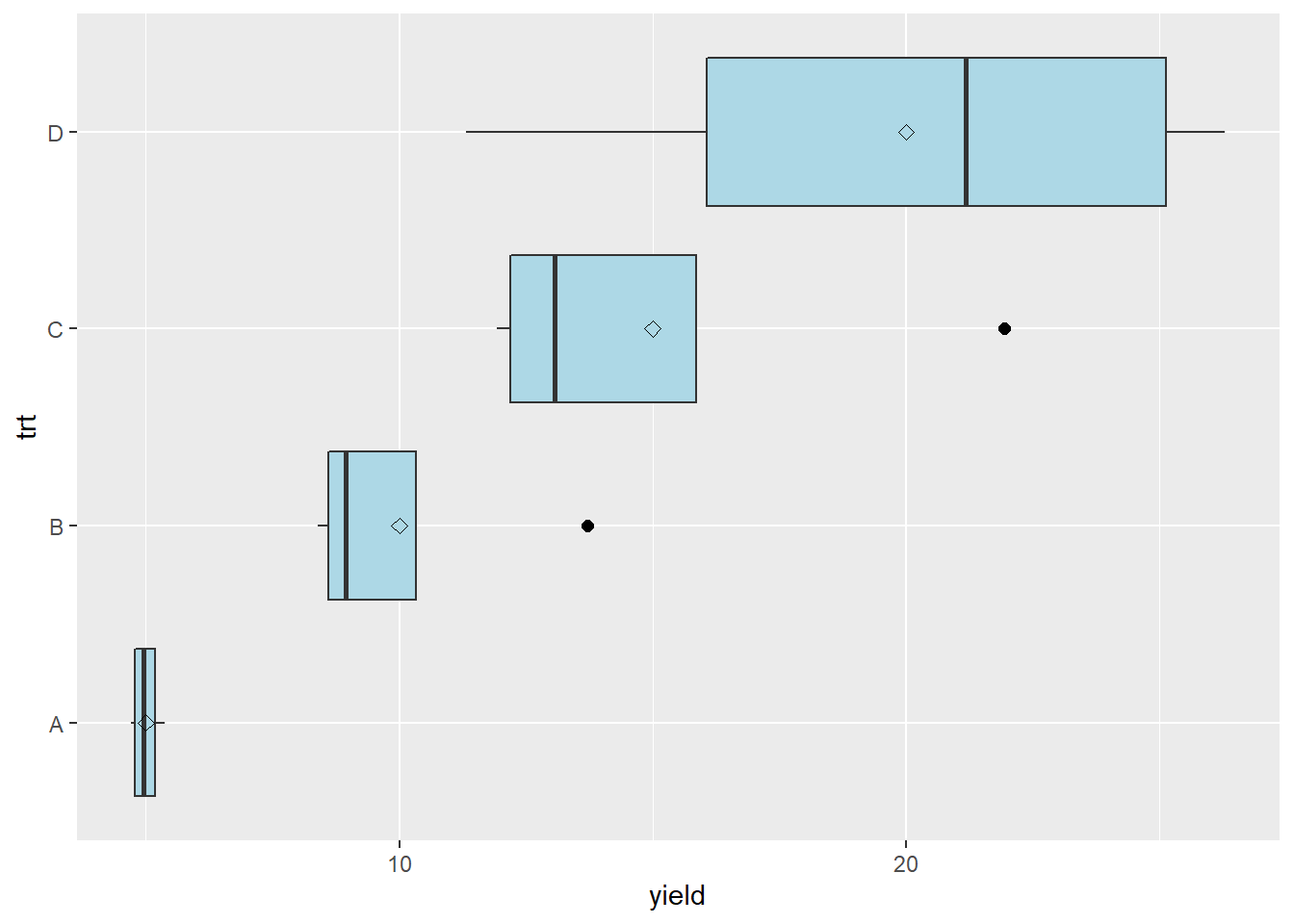

Now let’s transform the data using the natural log. The original and transformed data are show below.| trt | yield | log_yield |

|---|---|---|

| A | 4.802644 | 1.569167 |

| A | 5.113508 | 1.631886 |

| A | 5.369530 | 1.680740 |

| A | 4.714318 | 1.550604 |

| B | 9.211467 | 2.220449 |

| B | 8.401127 | 2.128366 |

| B | 8.671603 | 2.160054 |

| B | 13.715802 | 2.618549 |

| C | 11.933264 | 2.479330 |

| C | 21.939493 | 3.088288 |

| C | 13.841261 | 2.627654 |

| C | 12.285982 | 2.508459 |

| D | 11.328175 | 2.427293 |

| D | 26.292831 | 3.269296 |

| D | 24.739139 | 3.208387 |

| D | 17.639854 | 2.870161 |

Now when we run our Bartlett’s test, we see the p-value is 0.09 – there is no longer a significant difference among the treatment variances.

##

## Bartlett test of homogeneity of variances

##

## data: log_yield by trt

## Bartlett's K-squared = 6.4701, df = 3, p-value = 0.09085The largest standard deviation is still 6.5 times the smallest standard deviation – but the difference has decreased dramatically. When we run our analysis of variance, we see that our p-value has decreased by several orders of magnitude.

| term | df | sumsq | meansq | statistic | p.value |

|---|---|---|---|---|---|

| trt | 3 | 4.043462 | 1.347821 | 18.95189 | 7.58e-05 |

| Residuals | 12 | 0.853416 | 0.071118 | NA | NA |

| log_yield | groups | |

|---|---|---|

| D | 2.943784 | a |

| C | 2.675933 | ab |

| B | 2.281854 | b |

| A | 1.608099 | c |

Our last step is to back-transform the means from our LSD test. Each back-transformed mean is equal to Euler’s constant, \(e^x\), where x is each treatment mean in the transformed data.

| treatment | yield | groups |

|---|---|---|

| D | 18.987563 | a |

| C | 14.525893 | ab |

| B | 9.794826 | b |

| A | 4.993311 | c |

In the above table, we have back-transformed the means in our LSD table to their original scale.

9.4 Dealing with Missing Data

Missing data can be very problematic. Whether missing data are the result of deleting outliers, or plots lost to weather or human damage, there are three options:

- Drop the treatment entirely from the dataset

- Drop one observation from each of the other treatments; if a Randomized Complete Block Design was used, delete and entire block

- Predict the value for the plot that was omitted or lost

As you see, these are ugly choices to make. If you drop the treatment entirely from the dataset, you lose all ability to test it agaist the other treatments. If you drop other replicates or the remainder of a block, you retain all treatments but reduce the degrees of freedom for statistical tests, rendering them less sensitive.

The third option, predicting the value that is missing, comes with its own challenges. The missing value for a plot is generally calculated using the linear additive model. For a completely randomized design, the linear model is:

\[Y_{ij} = \mu + T_i + \epsilon_{(i)j} \]

So the missing value would be equal to \(\mu + Ti\), where \(i\) would be whatever treatment level the missing plot received.

In a randomized complete block design, the the linear additive model is:

\[Y_{ij} = \mu + B_i + T_j + BT_{ij} \]

The missing value would be equal to \(\mu + B_i + T_j\), where \(i\) is the block to which the missing plot occurred, and \(j\) is the treatment the treatment the missing plot received.

Note that we did not include \(\epsilon_{(i)j}\) or \(BT_{ij}\) in estimating the missing values. Although this approach is widely used, this is a shortcome. When we predict a missing value from the effects of treatment or treatment within block, we are using mean effects. So the predicted value will be exactly the mean for a given treatment or treatment within block. Because the predicted value is closer to the treatment and block means than it would be otherwise, it will contribute less to the treatment variance than it would normally.

We can demonstrate this with a normally-distributed dataset.

| plot | trt | yield |

|---|---|---|

| 1 | A | 166.8 |

| 2 | C | 169.9 |

| 3 | B | 170.6 |

| 4 | C | 174.9 |

| 5 | B | 161.8 |

| 6 | A | 168.3 |

| 7 | A | 169.2 |

| 8 | C | 159.9 |

| 9 | B | 160.8 |

| 10 | C | 166.2 |

| 11 | A | 160.3 |

| 12 | D | 172.1 |

| 13 | D | 175.4 |

| 14 | D | 175.3 |

| 15 | B | 170.6 |

| 16 | D | 172.2 |

Here is the anova for the original data:

| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | 166.150 | 2.352204 | 70.6358743 | 0.0000000 |

| trtB | -0.200 | 3.326519 | -0.0601229 | 0.9530474 |

| trtC | 1.575 | 3.326519 | 0.4734679 | 0.6443764 |

| trtD | 7.600 | 3.326519 | 2.2846705 | 0.0413279 |

And here is the boxplot:

Here is the table with the treatment and error effects broken out. Unlike previous effects tables, I have also added the within treatment variance.

| plot | trt | yield | mu | trt_var | trt_effect | error_effect |

|---|---|---|---|---|---|---|

| 1 | A | 166.8 | 168.3938 | 16.190000 | -2.24375 | 0.650 |

| 2 | C | 169.9 | 168.3938 | 39.922500 | -0.66875 | 2.175 |

| 3 | B | 170.6 | 168.3938 | 28.996667 | -2.44375 | 4.650 |

| 4 | C | 174.9 | 168.3938 | 39.922500 | -0.66875 | 7.175 |

| 5 | B | 161.8 | 168.3938 | 28.996667 | -2.44375 | -4.150 |

| 6 | A | 168.3 | 168.3938 | 16.190000 | -2.24375 | 2.150 |

| 7 | A | 169.2 | 168.3938 | 16.190000 | -2.24375 | 3.050 |

| 8 | C | 159.9 | 168.3938 | 39.922500 | -0.66875 | -7.825 |

| 9 | B | 160.8 | 168.3938 | 28.996667 | -2.44375 | -5.150 |

| 10 | C | 166.2 | 168.3938 | 39.922500 | -0.66875 | -1.525 |

| 11 | A | 160.3 | 168.3938 | 16.190000 | -2.24375 | -5.850 |

| 12 | D | 172.1 | 168.3938 | 3.416667 | 5.35625 | -1.650 |

| 13 | D | 175.4 | 168.3938 | 3.416667 | 5.35625 | 1.650 |

| 14 | D | 175.3 | 168.3938 | 3.416667 | 5.35625 | 1.550 |

| 15 | B | 170.6 | 168.3938 | 28.996667 | -2.44375 | 4.650 |

| 16 | D | 172.2 | 168.3938 | 3.416667 | 5.35625 | -1.550 |

Plot 8 has a greater error effect than most other plots. Let’s treat it as an outlier, delete it, and recalculate the treatment means. Let’s delete it and see how that changes the treatment effect for treatment C.

| plot | trt | yield | mu | trt_var | trt_effect | error_effect |

|---|---|---|---|---|---|---|

| 1 | A | 166.8 | 168.96 | 16.190000 | -2.810000 | 0.6500000 |

| 2 | C | 169.9 | 168.96 | 19.063333 | 1.373333 | -0.4333333 |

| 3 | B | 170.6 | 168.96 | 28.996667 | -3.010000 | 4.6500000 |

| 4 | C | 174.9 | 168.96 | 19.063333 | 1.373333 | 4.5666667 |

| 5 | B | 161.8 | 168.96 | 28.996667 | -3.010000 | -4.1500000 |

| 6 | A | 168.3 | 168.96 | 16.190000 | -2.810000 | 2.1500000 |

| 7 | A | 169.2 | 168.96 | 16.190000 | -2.810000 | 3.0500000 |

| 8 | C | NA | 168.96 | 19.063333 | 1.373333 | NA |

| 9 | B | 160.8 | 168.96 | 28.996667 | -3.010000 | -5.1500000 |

| 10 | C | 166.2 | 168.96 | 19.063333 | 1.373333 | -4.1333333 |

| 11 | A | 160.3 | 168.96 | 16.190000 | -2.810000 | -5.8500000 |

| 12 | D | 172.1 | 168.96 | 3.416667 | 4.790000 | -1.6500000 |

| 13 | D | 175.4 | 168.96 | 3.416667 | 4.790000 | 1.6500000 |

| 14 | D | 175.3 | 168.96 | 3.416667 | 4.790000 | 1.5500000 |

| 15 | B | 170.6 | 168.96 | 28.996667 | -3.010000 | 4.6500000 |

| 16 | D | 172.2 | 168.96 | 3.416667 | 4.790000 | -1.5500000 |

We can see that removing the yield data from plot 4 causes the treatment effect of treatment C to change – in fact, it has gone from negative to positive. The other treatment effects have also changed. The within-treatment variance for treatment C has also decreased by about one-third. When we re-run our analysis of variance, we see the treatment effect is 0.062 – almost significant at the P=0.05 level.

## Df Sum Sq Mean Sq F value Pr(>F)

## trt 3 165.3 55.09 3.294 0.0617 .

## Residuals 11 183.9 16.72

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

## 1 observation deleted due to missingnessWhat would happen if we estimated the yield for plot 8 using the population mean, mu, and the treatment effect?

\[ Y_{3,4} = \mu + T_i = 168.96 + 1.37 = 170.33 \]

We see that mu, treatment variance, treatment effect, and error have again changed. The variance within Treatment 3 has again decreased by about one-third.| plot | trt | yield | mu | trt_var | trt_effect | error_effect |

|---|---|---|---|---|---|---|

| 1 | A | 166.80 | 169.0456 | 16.190000 | -2.895625 | 0.6500 |

| 2 | C | 169.90 | 169.0456 | 12.708892 | 1.286875 | -0.4325 |

| 3 | B | 170.60 | 169.0456 | 28.996667 | -3.095625 | 4.6500 |

| 4 | C | 174.90 | 169.0456 | 12.708892 | 1.286875 | 4.5675 |

| 5 | B | 161.80 | 169.0456 | 28.996667 | -3.095625 | -4.1500 |

| 6 | A | 168.30 | 169.0456 | 16.190000 | -2.895625 | 2.1500 |

| 7 | A | 169.20 | 169.0456 | 16.190000 | -2.895625 | 3.0500 |

| 8 | C | 170.33 | 169.0456 | 12.708892 | 1.286875 | -0.0025 |

| 9 | B | 160.80 | 169.0456 | 28.996667 | -3.095625 | -5.1500 |

| 10 | C | 166.20 | 169.0456 | 12.708892 | 1.286875 | -4.1325 |

| 11 | A | 160.30 | 169.0456 | 16.190000 | -2.895625 | -5.8500 |

| 12 | D | 172.10 | 169.0456 | 3.416667 | 4.704375 | -1.6500 |

| 13 | D | 175.40 | 169.0456 | 3.416667 | 4.704375 | 1.6500 |

| 14 | D | 175.30 | 169.0456 | 3.416667 | 4.704375 | 1.5500 |

| 15 | B | 170.60 | 169.0456 | 28.996667 | -3.095625 | 4.6500 |

| 16 | D | 172.20 | 169.0456 | 3.416667 | 4.704375 | -1.5500 |

And here is the kicker, wait for it…

## # A tibble: 2 x 6

## term df sumsq meansq statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 trt 3 167. 55.7 3.63 0.0450

## 2 Residuals 12 184. 15.3 NA NAOur treatment differences are now significant. Why? Because when we estimate a missing value using only the population mean and treatment effect, we decrease the overall variance. And why does that happen? Because we have now created that is almost exactly equal to the treatment mean. Was there a change in the originally observed values associated with this change in significance? No. And this is problem. But there is a way to reduce it.

The problem with the model we have used so far is we did not include the error effect in our yield estimate. If we added it in, our yield estimate for plot 8 would be more appropriate. Of course, we cannot calculate the error effect because it is random and changes among plots. But, knowing that error effects are normally distributed around the treatment mean, we can model that distribution and draw an individual from it at random, to use as the error effect in our estimate.

The error distribution has its own mean, which should be close to zero:

## [1] 1.894781e-15And its own standard deviation:

## [1] 3.624684Knowing these two parameters, we can select a value for our error effect from that distribution.

## [1] -5.36659Let’s plug that into our yield estimate and see how our statistics change.

\[ Y_{3,4} = \mu + T_i = 168.96 + 1.37 - 5.37 = 164.96 \]

We see that mu, treatment variance, treatment effect, and error have again changed. The variance within Treatment 3 has again decreased by about one-third.| plot | trt | yield | mu | trt_var | trt_effect | error_effect |

|---|---|---|---|---|---|---|

| 1 | A | 166.80 | 168.71 | 16.190000 | -2.56 | 0.65 |

| 2 | C | 169.90 | 168.71 | 19.927067 | 0.28 | 0.91 |

| 3 | B | 170.60 | 168.71 | 28.996667 | -2.76 | 4.65 |

| 4 | C | 174.90 | 168.71 | 19.927067 | 0.28 | 5.91 |

| 5 | B | 161.80 | 168.71 | 28.996667 | -2.76 | -4.15 |

| 6 | A | 168.30 | 168.71 | 16.190000 | -2.56 | 2.15 |

| 7 | A | 169.20 | 168.71 | 16.190000 | -2.56 | 3.05 |

| 8 | C | 164.96 | 168.71 | 19.927067 | 0.28 | -4.03 |

| 9 | B | 160.80 | 168.71 | 28.996667 | -2.76 | -5.15 |

| 10 | C | 166.20 | 168.71 | 19.927067 | 0.28 | -2.79 |

| 11 | A | 160.30 | 168.71 | 16.190000 | -2.56 | -5.85 |

| 12 | D | 172.10 | 168.71 | 3.416667 | 5.04 | -1.65 |

| 13 | D | 175.40 | 168.71 | 3.416667 | 5.04 | 1.65 |

| 14 | D | 175.30 | 168.71 | 3.416667 | 5.04 | 1.55 |

| 15 | B | 170.60 | 168.71 | 28.996667 | -2.76 | 4.65 |

| 16 | D | 172.20 | 168.71 | 3.416667 | 5.04 | -1.55 |

When we looks at the ANOVA, we see that the mean square and p-value are approximately the same as they were before the missing value was interpolated.

## Df Sum Sq Mean Sq F value Pr(>F)

## trt 3 158.6 52.87 3.086 0.068 .

## Residuals 12 205.6 17.13

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1In R, there is a nice package called mice that does this for us. We will learn about it in an exercise this week.

One final note, however: we should not interpolate missing values if 5% or more of the data are missing. Why? Because, as we have seen above, that interpolated value can markedly change our interpretation of the data. Restricting interpolation to datasets where a small percentage of data are missing reduces the leverage one observation has on conclusions from the data. It also increases the accuracy of the interpolated values.

In smaller datasets, then, we may to use the approaches above. How important is it to have that treatment in the trial, versus losing a replicaton of the other treatments? For the latter option, you may want to test whether including or omitting that replicate changes your conclusion from the data. If not, it may be easiest to drop the replication.

9.5 Summary

Agronomic data are rarely nice and neat. The scale in which we work (acres and hectares), plus the variations in soil types, equipment performance, weather, weeds and other pests, make it virtually impossible to ensure our experimental units are near-equivalent. Above all, our subjects are alive and integrate every aspect of their environment into their growth. Unlike human subjects, corn plants cannot answer a history questionnaire. Months or years of a trial can be erased by one plugged nozzle, one broken singulator, one strong wind, one “white combine”. It’s a nasty business.

It is important that we inspect our data and consider its shortcomings. We have ways to address these shortcomings. Outliers may be trimmed, or we may use other techniques to overcome them. We have also learned how to re-scale data (using logarithms or square roots) so that variances are closer to equal, and how to “fill in” missing values using imputation so that our datasets can be balanced.

If stuck, consult with other data scientists. There are “robust” statistics that are based on using the median, rather than the mean, for summaries and tests. There are also non-parametric tests, some of which we will be introduced to towards the end of this course. Non-parametric methods don’t use linear models – therefore, they are more insulated from the problems discussed above. We will learn about these in a future unit.